Unlimited & Free Concurrency

One of the main benefits of using Terraform is the speed at which engineers can develop code and deploy it. A single module can be used to make changes across thousands of resources impacting hundreds of applications. The problem is that Terraform is only as fast as the system it is running on.

This affects not only the speed at which resources can be delivered but also the developer experience. As a developer, you might be under pressure to get changes out the door as fast as possible during a maintenance window or incident, and the last thing you want is to be throttled by the concurrency limits of the system that is deploying Terraform.

Every Scalr account automatically gets five concurrent runs when subscribing to a paid plan. While we would love to give everyone unlimited run concurrency, we need controls in place to prevent abuse of the system; that is one of our core principles. However, those concurrency limits also counted runs executing on self-hosted agents, which couldn't really have abused the core of Scalr.io.

Because counting agent runs against the limit somewhat contradicted that reasoning, we decided to change how we handle concurrency with self-hosted agents.

We decoupled the concurrency applied to the Scalr.io runners from that of the self-hosted agents. Each agent can run a maximum of 5 concurrent runs, and our customers are in full control of how many agents they deploy.

For example, if your account has 5 concurrent runs on the Scalr.io runners and you deploy 5 agents, you now have 30 concurrent runs (5 + 5 × 5) at no extra cost.
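The arithmetic can be sketched in a few lines. This is a minimal illustration, assuming the total is simply the Scalr.io runner limit plus a fixed 5 runs per self-hosted agent; `total_concurrency` and its parameters are hypothetical names, not part of any Scalr API or configuration.

```python
# Hypothetical helper illustrating the concurrency model described above.
# Assumption: each self-hosted agent adds a fixed number of run slots
# (5 per agent, per this post) on top of the account's Scalr.io runner limit.

AGENT_MAX_CONCURRENT_RUNS = 5  # per-agent cap described in this post


def total_concurrency(scalr_runner_limit: int, agent_count: int,
                      runs_per_agent: int = AGENT_MAX_CONCURRENT_RUNS) -> int:
    """Return how many runs can execute at the same time."""
    if scalr_runner_limit < 0 or agent_count < 0 or runs_per_agent < 0:
        raise ValueError("limits and counts must be non-negative")
    # Runner capacity and agent capacity are decoupled, so they simply add up.
    return scalr_runner_limit + agent_count * runs_per_agent


if __name__ == "__main__":
    print(total_concurrency(5, 5))  # 30: the example from the text
    print(total_concurrency(5, 0))  # 5: Scalr.io runners only, no agents
```

Because agent capacity scales linearly with the number of agents you deploy, adding agents is the lever for raising concurrency under this model.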

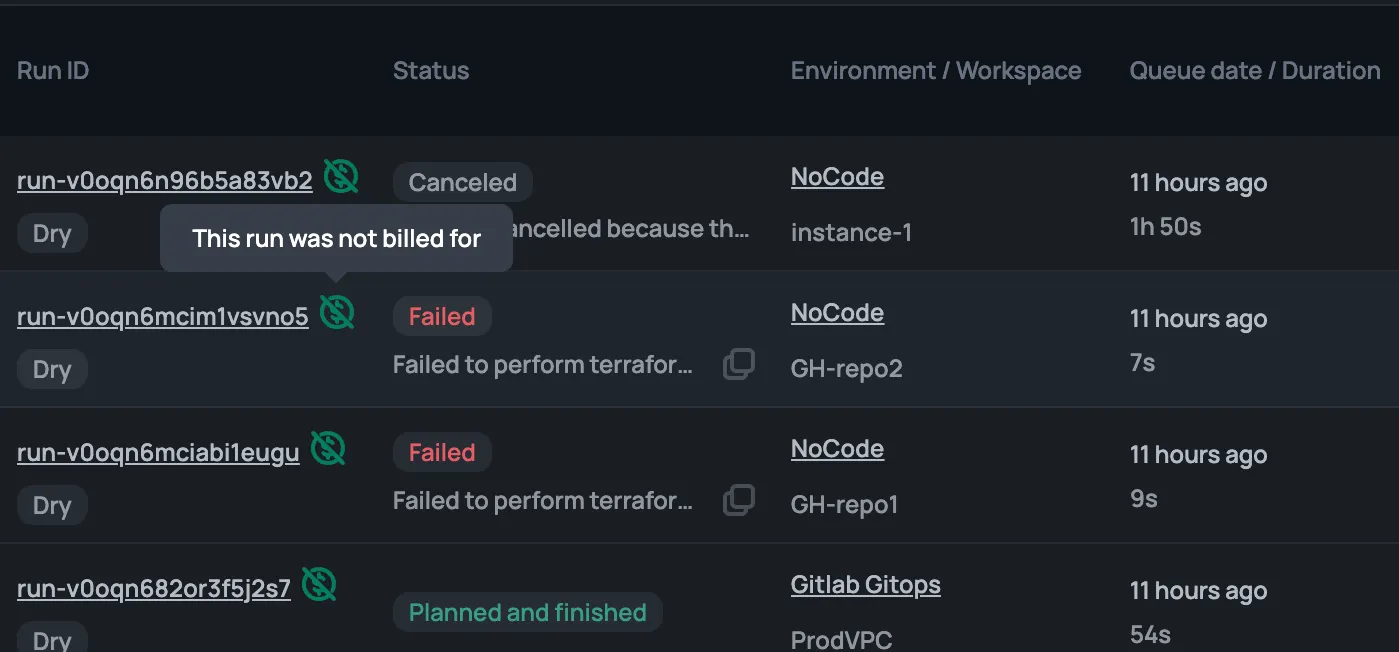

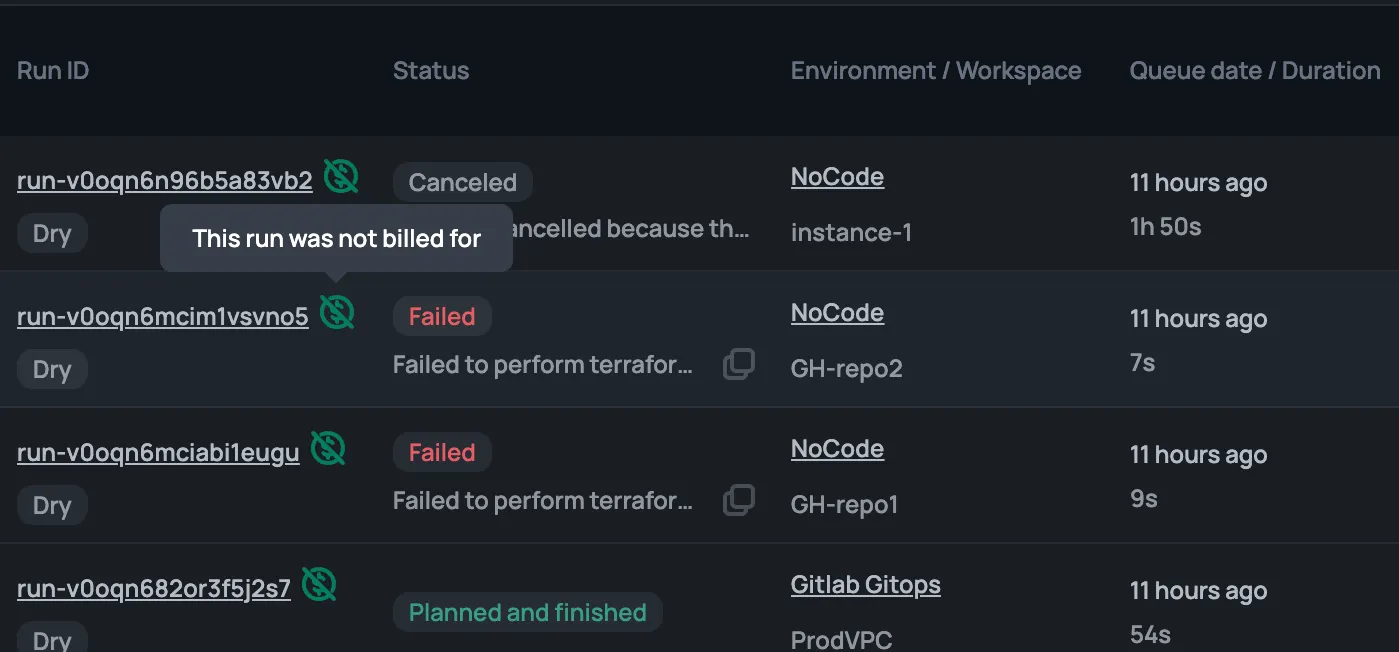

While we knew this change would have a positive impact on the developer experience, we didn't expect how dramatic that impact would be. Across our entire user base, we saw the following:

Looking deeper at one of the most impacted customers, a large hardware retailer:

Scalr is a drop-in replacement for Terraform Cloud (TFC). Here is how the Scalr offering compares to TFC:

By giving customers control over their own concurrency, we help them deliver to their customers faster and improve their own developer experience. Don't be limited by concurrency for no reason: sign up for Scalr and give it a try today.

Disclaimer: All information was accurate as of April 10, 2024, based on publicly available information.