This was written by Jack Roper, a guest blogger.

To get started using Scalr with Azure DevOps, there are 3 initial stages to go through. In this series of 3 posts on using Scalr with Azure DevOps, I will walk through each stage step-by-step.

This is the third and final post in the series. It focuses on how to create a workspace and execute your Terraform code, both using PR automation and by plugging the CLI into Azure DevOps. Note that there is a third way to create a workspace, using the module registry, but this still uses the VCS connection.

If you missed the first post in the series, check it out here — picking a workflow.

And the second post here — how to add Azure credentials and link them to an environment.

In the first 2 posts, we linked our Azure DevOps instance and our Azure subscription to our Scalr environment. Let’s explore the current environment setup!

Selecting provider credentials will display our linked Azure account.

Selecting ‘VCS’ we see that our Azure DevOps project is linked.

Selecting variables will display the variables inherited from cloud credentials that were set up earlier.

The Terraform code on my GitHub page contains configuration files that add some groups to Azure Active Directory. You can download it here if you want to follow along! Feel free to follow me on GitHub!

https://github.com/jackwesleyroper/az-ad-group-module

The next port of call is to create a workspace. A workspace is where all objects related to Terraform-managed resources are stored and managed.

1. Within the environment, select the ‘create workspace’ button.

2. We will be creating a workspace linked to our Azure DevOps VCS provider. Select it from the drop-down list, then choose the repo that holds the code and the branch to use. Here you can also specify the Terraform version and, if desired, auto-apply changes when a terraform plan run is successful.

Enabling VCS-driven dry runs lets Scalr automatically kick off a dry run when it detects a pull request opened against a branch.

The Terraform directory sets the directory from which Terraform runs and executes commands. It is a relative path and must be a subdirectory of the top level of the repository, or of the subdirectory if one is specified.

Runs can also be triggered on certain subdirectories only. For this option to be enabled, the working directory needs to be set.

Lastly, the custom hooks option can be used to call custom actions at various stages in the Terraform workflow. These can be set before or after the plan stage, or before or after the apply stage.

3. Press create once all the options are filled out.

Notice that we will now need to set some variables. Scalr detects which variables in the Terraform code are required to be set (those that do not have values), and will automatically create them. Variables can be set in the Terraform code or the Scalr UI. For my example, I need to fill out the ad_group_names variable.
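For illustration, a variable declared without a default, like the hypothetical declaration below, is the kind that Scalr flags as needing a value. The type and description here are assumptions; the actual module may declare ad_group_names differently.

```hcl
# variables.tf (hypothetical sketch, not copied from the module)
# No "default" is given, so a value must be supplied from outside the code,
# for example in the Scalr UI.
variable "ad_group_names" {
  type        = list(string) # assumed type
  description = "Names of the Azure AD groups to create"
}
```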

If your Terraform configuration requires shell variables, these can also be added here. Note that the variables we used earlier to set up the cloud credentials for our Azure subscription are automatically inherited.

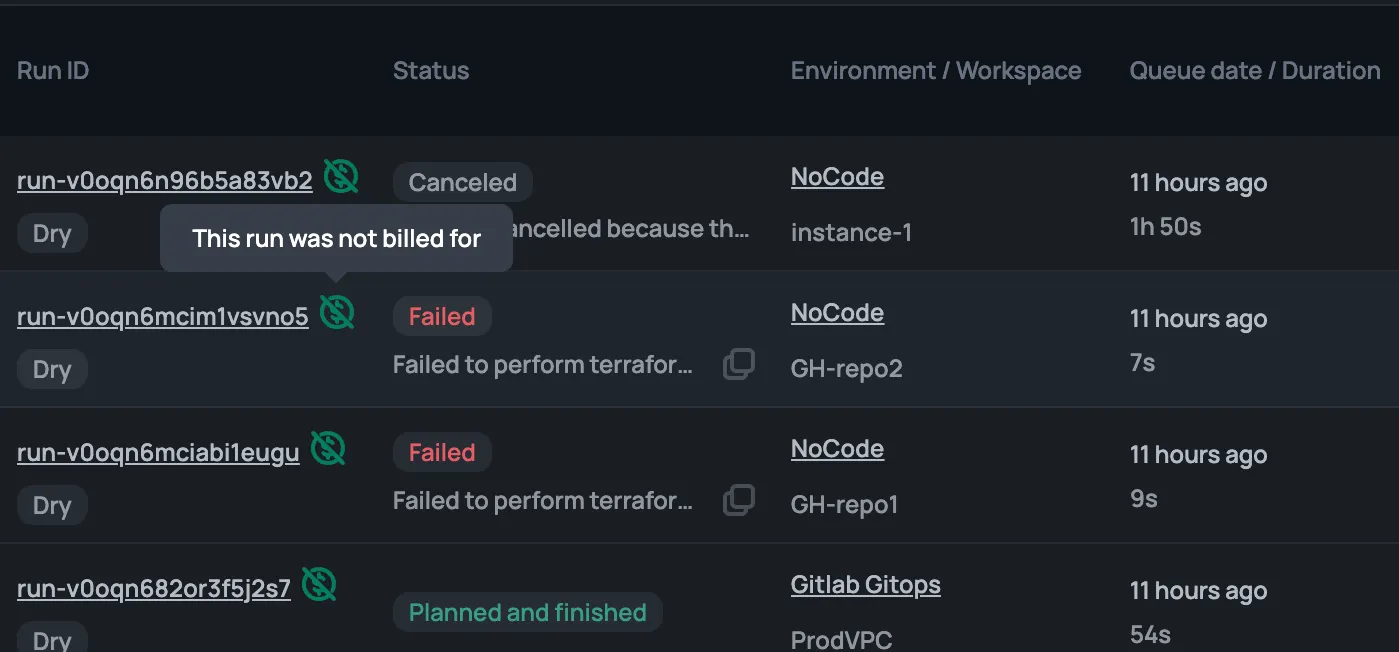

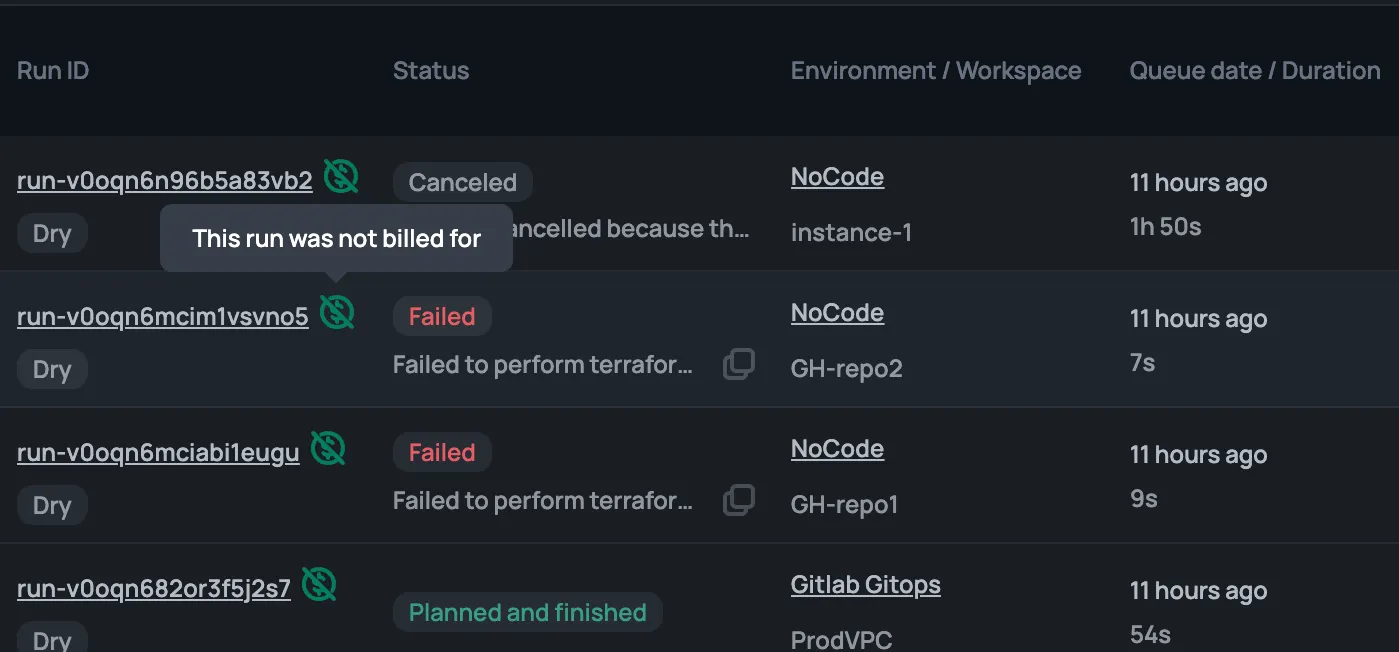

Now we have everything in place we can go ahead and run our code.

Navigate to Runs -> Queue Run, enter a reason for the run, and hit Queue run.

My first run errored! The custom hook that I set up earlier detected that my code was not formatted correctly and exited with error code 3. The check option I used exits with code 0 if the configuration is formatted.

-check Check if the input is formatted. Exit status will be 0 if all input is properly formatted and non-zero otherwise.

Over in Azure DevOps, I also noticed an error message on my repo:

After I fixed the formatting, the next run confirmed that my 3 groups would be created in Azure AD and that the configuration was formatted correctly. Over in Azure DevOps, I could see the plan in progress:

The ‘needs confirmation’ warning was displayed, so at the bottom of the run screen I hit ‘approve’.

Note that the repository link and commit link will take you to the appropriate location in Azure DevOps.

The workspace runs through the following stages:

Plan, which allows users to view the planned creation, update, or destruction of resources through the standard console output or the detailed plan view. The detailed plan alerts users to destructive changes and makes it easier to search the plan when many resources are affected. It is also the place to check who approved an apply and any comments associated with the approval.

Cost Estimate, which will show you the estimated cost for the resources that are being created. This information can be used for writing a policy to check cost.

Policy Check, which is used to check the Terraform plan JSON output against Open Policy Agent policies. This step can be used to enforce your company standards.

Apply, which will actually create, update, or destroy the resources based on the Terraform configuration file.

Note that in my example, I was creating Azure AD Groups that do not incur any cost, so Scalr simply reported that it had detected no resources. Also, I had not defined any policies, so that stage was skipped.

Now consider that I already had a workflow set up in Azure DevOps that used the Terraform CLI, and that I wanted to use it with Scalr. Scalr will execute the runs in a container on the Scalr backend, but the logs and output will be sent back to the console.

In post 1, we showed how to obtain an API token to use Scalr as a remote backend with the local Terraform CLI. This was done interactively. An Azure DevOps pipeline is an automated process, so the login stage needs to be automated rather than interactive.

1. Generate an API token. If you didn't save the one generated in the first article, go ahead and generate a new one from the Scalr UI.

2. Create a new workspace. Click on New workspace, then choose CLI. Configure the required options and press create.

3. Click on ‘base backend configuration’.

This will show the backend block that your Terraform code needs in order to use Scalr as the remote backend. Add it to your configuration file.
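As a rough guide, the block shown in the Scalr UI generally follows the shape of the sketch below. Every value here is a placeholder rather than something taken from this walkthrough, and the assumption is that the organization field carries the Scalr environment ID. Copy the real block from the ‘base backend configuration’ dialog.

```hcl
terraform {
  backend "remote" {
    hostname     = "<account>.scalr.io" # placeholder: your Scalr hostname
    organization = "<environment-id>"   # placeholder: assumed to be the Scalr environment ID

    workspaces {
      name = "<workspace-name>" # placeholder: the CLI workspace created in step 2
    }
  }
}
```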

4. Next, in order to connect to Scalr, we need to provide the API token. According to the Terraform docs, this must be a user token or a team token, and cannot be an organization token.

In Azure DevOps, go to Pipelines -> Library and add a new variable group.

Add the token as a secret value, with scalr-api-token as the name.
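One non-interactive way to hand the token to Terraform is a CLI configuration file containing a credentials block, which a pipeline step can write out before running terraform init. This is a sketch of that approach, not necessarily what my pipeline does; the hostname and token are placeholders, and the pipeline would need to substitute the scalr-api-token secret itself because this file does not expand variables.

```hcl
# Terraform CLI configuration file (for example, a file referenced by the
# TF_CLI_CONFIG_FILE environment variable). Placeholders throughout.
credentials "<account>.scalr.io" {
  token = "<value of scalr-api-token>" # written by the pipeline, never committed to the repo
}
```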

Note that ‘Run’ variables are currently not supported when using a remote backend (i.e. you cannot use the -var=<variable> or -var-file=<file> options). If you specify these, you will receive this error:

The “remote” backend does not support setting run variables at this time. Currently the only to way to pass variables to the remote backend is by creating a ‘*.auto.tfvars’ variables file. This file will automatically be loaded by the “remote” backend when the workspace is configured to use Terraform v0.10.0 or later.
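For the ad_group_names variable used earlier, such a file might look like the sketch below. The file name and group names are made up for illustration, and it assumes the variable is a list of strings.

```hcl
# groups.auto.tfvars (hypothetical file name; any *.auto.tfvars file is loaded automatically)
ad_group_names = [
  "example-group-1", # illustrative names only
  "example-group-2",
  "example-group-3",
]
```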

On execution, you will see the pipeline run through each stage, with the added bonus of a cost estimate at the end of the apply stage.

In this final article in the series, we have shown how to create a workspace, both VCS-integrated and CLI-driven, and executed our Terraform code. We used an Azure DevOps pipeline to automate the workflow on the CLI-driven workspace, using Scalr as the remote backend.

Note that workspaces and variables can be created using the Scalr Terraform provider!

Cheers! 🍻