
HashiCorp Terraform has become the go-to tool for defining infrastructure as code (IaC), enabling teams to provision and manage infrastructure across cloud providers such as AWS, Azure, and Google Cloud. However, as Terraform projects grow in size and complexity, and as more team members contribute, new challenges emerge: simply writing Terraform configuration files is no longer enough. This guide, aimed at developers and DevOps teams, covers best practices for using Terraform at scale, addressing common pain points and offering concrete strategies. It focuses on structuring Terraform code, managing state effectively, streamlining Terraform operations through CI/CD, ensuring compliance, testing, and tackling performance bottlenecks.

The foundation of using Terraform effectively at scale lies in how the Terraform code is structured and organized. Poorly structured code can quickly become a maintenance nightmare, hindering collaboration and slowing down Terraform deployments.

A fundamental decision when scaling Terraform projects is whether to adopt a monorepo or a polyrepo strategy for the version control system.1

For example, a monorepo might be laid out as follows:

```text
terraform-monorepo/
├── modules/                  # Shared, reusable Terraform modules
│   ├── vpc/
│   └── rds/
├── environments/             # Root modules per environment
│   ├── dev/
│   │   ├── networking/       # Component within dev
│   │   │   └── main.tf
│   │   └── app-db/
│   │       └── main.tf
│   ├── staging/
│   └── prod/
└── services/                 # Root modules for different services
    ├── service-a/
    │   └── main.tf
    └── service-b/
```

In a polyrepo setup, by contrast, dependencies between components that live in different repositories are typically wired together with terraform_remote_state data sources. Discoverability of shared code might be reduced, and there's a risk of code duplication if not managed carefully.1

The choice between these strategies often depends on factors like team size, organizational structure, the interconnectedness of infrastructure components, and the maturity of CI/CD tooling.1 It's not uncommon for organizations to evolve their repository strategy over time or adopt hybrid approaches. For instance, a central platform team might manage core, reusable Terraform modules in a monorepo, while application teams consume these modules from their application-specific polyrepos. The guiding principle should be that the repository structure facilitates, rather than hinders, an efficient Terraform workflow.
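When application teams consume shared modules from a platform team's repository, pinning the module source to a Git tag keeps each polyrepo on a known module version. The following is a minimal sketch. It assumes a hypothetical repository, example-org/terraform-modules, containing a vpc module that accepts name and cidr inputs:

```hcl
module "vpc" {
  # "//modules/vpc" selects a subdirectory of the repository;
  # "?ref=v1.2.0" pins a Git tag (repository, path, and tag are hypothetical)
  source = "git::https://github.com/example-org/terraform-modules.git//modules/vpc?ref=v1.2.0"

  name = "app-vpc"
  cidr = "10.10.0.0/16"
}
```

Upgrading the shared module then becomes an explicit change to the ref value, reviewed like any other pull request.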

Table: Monorepo vs. Polyrepo for Terraform Projects

This table provides a high-level comparison. The "best" choice is contextual and may evolve. The easiest way to manage this evolution is through well-defined module interfaces and clear contracts, ensuring that changes in one part of the infrastructure have predictable impacts on others.

A root module is the entry point for Terraform—the directory where terraform apply is executed. It contains the Terraform configuration files that Terraform processes, including provider configurations, backend configurations, and calls to child modules.5

A critical best practice for scaling is to minimize the number of resources managed directly within a single root module, and consequently within a single state file. Managing too many resources in one state (as a rough guide, more than a few dozen to around 100) slows down operations like terraform plan and terraform apply, because Terraform refreshes every resource in the state on each run.7 This directly addresses a common developer pain point: long waits for Terraform commands to complete.

A recommended directory structure separates service/application logic from environment-specific configurations 7:

```text
SERVICE-DIRECTORY/
|-- modules/
|   |-- <service-name>/       # Contains the actual reusable Terraform code
|       |-- main.tf
|       |-- variables.tf
|       |-- outputs.tf
|       |-- provider.tf       # Defines required provider versions, not configurations
|       |-- README.md
|-- environments/
    |-- dev/
    |   |-- backend.tf        # Remote state configuration for dev
    |   |-- main.tf           # Instantiates modules/<service-name> with dev-specific variables
    |   |-- terraform.tfvars  # Dev-specific input variables
    |-- prod/
        |-- backend.tf        # Remote state configuration for prod
        |-- main.tf           # Instantiates modules/<service-name> with prod-specific variables
        |-- terraform.tfvars  # Prod-specific input variables
```

In this structure, each environment directory (e.g., environments/dev/) is the root module for that environment, with main.tf as its entry point. Its primary role is to instantiate the core service module (from modules/<service-name>) and pass in environment-specific input variables. Root modules therefore stay lean and act as orchestrators for each environment, rather than defining numerous resources themselves. Delegating resource creation to child modules is key to keeping individual state files manageable and Terraform runs performant.
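A minimal sketch of such a lean root module, environments/dev/main.tf, follows. The service module name (orders-api) and its two inputs are hypothetical, because a real module defines its own interface. The variable declarations would also normally live in a separate variables.tf.

```hcl
# environments/dev/main.tf
# Hypothetical service module; the root module only wires inputs through.
module "orders_api" {
  source = "../../modules/orders-api"

  environment    = var.environment
  instance_count = var.instance_count
}

variable "environment" {
  type        = string
  description = "Deployment environment name."
}

variable "instance_count" {
  type        = number
  description = "Number of application instances."
}

# environments/dev/terraform.tfvars would then contain, for example:
#   environment    = "dev"
#   instance_count = 1
```

The prod directory would contain the same main.tf with a different terraform.tfvars and backend.tf, so environments differ only in data, not in code.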

Reusable Terraform modules are fundamental to managing Terraform infrastructure at scale. They allow developers to encapsulate configurations for specific pieces of infrastructure (e.g., a VPC, a Kubernetes cluster, an auto scaling group) and reuse them across different environments and Terraform projects.6 This practice addresses the pain point of duplicating Terraform code and helps maintain consistency.

Principles of Good Module Design:

- Pin module versions. When consuming a module from a registry, constrain its version so that upstream changes are adopted deliberately:

```hcl
module "production_vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 3.14" // Allows 3.14 and later 3.x releases, but not 4.0

  name = "production-vpc"
  cidr = "10.0.0.0/16"
  #... other input variables
}
```

- Follow the standard file layout: main.tf for resource definitions, variables.tf for input variable declarations, and outputs.tf for output value definitions.6 A comprehensive README.md file is crucial, explaining the module's purpose, inputs, outputs, provider requirements, and usage examples.6 Example configurations should be placed in an examples/ subdirectory.16
- Declare provider requirements, not provider configurations. A reusable module should state which providers and versions it supports in a required_providers block and leave provider configuration to the root module.15 Example of required_providers in a module:

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.60.0" // Specify a minimum compatible version
    }
  }

  required_version = ">= 1.3.0" // Specify minimum Terraform version
}
```

- Name modules intended for a registry using the convention terraform-<PROVIDER>-<NAME>.8
- Place nested modules in a modules/ subdirectory within the main module. These are typically considered private to the parent module unless explicitly documented otherwise.15

Well-designed modules act as contracts. Their inputs are the terms, outputs are the deliverables, and versioning manages the evolution of this contract. This "contractual" nature allows teams to work independently and with confidence, which is indispensable for scaling Terraform operations.
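To make the contract idea concrete, here is a sketch of a module's input and output declarations. The variable, its bounds, and the aws_instance.this resource it refers to are hypothetical. The validation block lets the module reject bad inputs at plan time instead of failing later at the cloud API.

```hcl
# modules/<service-name>/variables.tf (excerpt)
variable "instance_count" {
  type        = number
  description = "Number of instances to create."
  default     = 1

  validation {
    condition     = var.instance_count >= 1 && var.instance_count <= 10
    error_message = "The instance_count value must be between 1 and 10."
  }
}

# modules/<service-name>/outputs.tf (excerpt)
# Assumes the module defines aws_instance.this with count = var.instance_count
output "instance_ids" {
  description = "IDs of the instances created by this module."
  value       = aws_instance.this[*].id
}
```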

Consistent naming and style are not merely aesthetic; they are vital for readability, maintainability, and collaboration in large Terraform projects.10

- Use underscores to separate words in resource names (e.g., aws_instance.web_server), data source names, and variable names. Resource names themselves should generally be singular.16
- Include units in the names of numeric inputs and outputs (e.g., ram_size_gb).16
- Give boolean variables positive names (e.g., enable_monitoring instead of disable_monitoring) to simplify conditional logic.16
- Group related resources into logically named files (e.g., network.tf, compute.tf, loadbalancer.tf) instead of putting everything in one main.tf or creating a separate file for every single resource.16
- Run terraform fmt to ensure consistent code formatting, and enforce it through pre-commit hooks and as a step in the CI/CD pipeline.16 Consistent formatting reduces cognitive load and minimizes trivial merge conflicts.

Investing in and enforcing clear naming conventions and code style is an investment in team productivity and the long-term health of the Terraform codebase. It is a low-cost way to improve collaboration and shorten the learning curve for new team members.
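The following short sketch applies these conventions to an AWS instance. The variable names are illustrative choices and are not taken from any particular module.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI to launch."
}

# Unit in the name: EBS volume sizes are specified in GiB
variable "root_volume_size_gib" {
  type        = number
  description = "Size of the root volume, in GiB."
  default     = 20
}

# Positive boolean: true means "on"
variable "enable_monitoring" {
  type        = bool
  description = "Whether to enable detailed monitoring."
  default     = true
}

# Singular resource name, words separated by underscores
resource "aws_instance" "web_server" {
  ami           = var.ami_id
  instance_type = "t3.micro"
  monitoring    = var.enable_monitoring

  root_block_device {
    volume_size = var.root_volume_size_gib
  }
}
```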

The Terraform state file is the heart of any Terraform deployment, mapping declared resources to their real-world counterparts. At scale, managing this state correctly is critical to prevent corruption, ensure data integrity, and maintain performance.

Using local state files (terraform.tfstate stored on a developer's machine) is not viable for team collaboration or production environments. It leads to risks of data loss, corruption, and conflicts when multiple developers attempt Terraform operations simultaneously.9 This addresses the significant pain point of developers overwriting each other's changes or working with outdated state information.

The solution is to use a remote backend, which stores Terraform state in a single shared, durable, and accessible location. Popular choices include AWS S3 (often paired with DynamoDB for locking), Azure Blob Storage, Google Cloud Storage, or managed services like Terraform Cloud.10 Terraform Cloud notably offers free remote state management capabilities, including locking.13

Example S3 backend configuration:

```hcl
terraform {
  backend "s3" {
    bucket         = "our-company-terraform-state-prod"     // Use a globally unique bucket name
    key            = "infra/core-network/terraform.tfstate" // Path to the state file
    region         = "us-west-2"
    dynamodb_table = "our-company-terraform-locks-prod"     // For state locking
    encrypt        = true                                   // Always encrypt state at rest
  }
}
```

This configuration stores the state in an S3 bucket, uses a DynamoDB table for state locking to prevent concurrent modifications, and enables server-side encryption for the state file.13
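Recent Terraform releases (1.10 and later) can also lock S3 state without DynamoDB, using a lock file stored next to the state object. Newer versions deprecate the dynamodb_table argument in favor of this option. The following sketch shows the same backend using that option; check it against the S3 backend documentation for your Terraform version before adopting it.

```hcl
terraform {
  backend "s3" {
    bucket       = "our-company-terraform-state-prod"
    key          = "infra/core-network/terraform.tfstate"
    region       = "us-west-2"
    use_lockfile = true # S3-native locking via a .tflock object; no DynamoDB table needed
    encrypt      = true
  }
}
```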

State locking is an indispensable feature provided by most remote backends. It ensures that only one operation at a time can write to a given state file (terraform plan and terraform apply both acquire the lock by default), preventing race conditions and state corruption.10 When locking works, a second concurrent run fails with a lock error instead of silently modifying the same state.

Given that Terraform state files can contain sensitive information, security is paramount. Always enable encryption at rest for your chosen backend (e.g., encrypt = true for S3) and ensure that direct access to the backend storage is tightly controlled through IAM policies or similar mechanisms.11

A common pattern is to give each environment its own backend configuration. This is often achieved by placing a backend.tf file in each environment-specific directory (e.g., environments/dev/backend.tf, environments/prod/backend.tf), with a key (the state file's path within the storage bucket) that is unique to that environment.7 For instance, the dev environment's state might be stored at dev/terraform.tfstate and prod at prod/terraform.tfstate within the same bucket. Keeping a separate state file per environment is fundamental to managing Terraform state safely. For stronger isolation, each environment can also use its own bucket or account.
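The following sketches the per-environment backend files described above. It reuses the naming style of the earlier S3 example; the shared bucket and lock table names are assumptions. Each environment needs its own file because a backend block cannot reference variables, locals, or other expressions.

```hcl
# environments/dev/backend.tf
terraform {
  backend "s3" {
    bucket         = "our-company-terraform-state"
    key            = "dev/terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "our-company-terraform-locks"
    encrypt        = true
  }
}

# environments/prod/backend.tf (a separate file in a separate directory)
terraform {
  backend "s3" {
    bucket         = "our-company-terraform-state"
    key            = "prod/terraform.tfstate"
    region         = "us-west-2"
    dynamodb_table = "our-company-terraform-locks"
    encrypt        = true
  }
}
```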

Terraform workspaces offer a mechanism to manage multiple instances of the same Terraform configuration, each with its own state file, from a single configuration directory.1 For example, a developer might run terraform workspace new feature-x to create an isolated environment for testing a new feature, which will have its own state file distinct from default, dev, or prod.

The terraform.workspace interpolation sequence can be used within the Terraform code to introduce minor variations based on the currently selected workspace, such as changing instance sizes, the number of instances, or resource tags 25:

```hcl
resource "aws_instance" "web_server" {
  ami           = "ami-0abcdef1234567890" // Example AMI
  instance_type = terraform.workspace == "prod" ? "m5.large" : "t2.micro"
  count         = terraform.workspace == "prod" ? 5 : 1

  tags = {
    Name        = "WebServer-${terraform.workspace}"
    Environment = terraform.workspace
  }
}
```

This code snippet demonstrates how the instance type and count can differ between the prod workspace and all others.
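An alternative moves the per-environment values out of the .tf files and into variables set from per-environment .tfvars files. The following sketch assumes files named dev.tfvars and prod.tfvars next to the configuration.

```hcl
variable "instance_type" {
  type    = string
  default = "t2.micro"
}

variable "instance_count" {
  type    = number
  default = 1
}

resource "aws_instance" "web_server" {
  count         = var.instance_count
  ami           = "ami-0abcdef1234567890" # Example AMI
  instance_type = var.instance_type

  tags = {
    Name        = "WebServer-${terraform.workspace}"
    Environment = terraform.workspace
  }
}

# prod.tfvars would contain:
#   instance_type  = "m5.large"
#   instance_count = 5
```

With the prod workspace selected (terraform workspace select prod), the plan would be run as terraform plan -var-file=prod.tfvars. The pipeline or operator is responsible for pairing each workspace with its var file.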

Best Practices for Terraform Workspaces:

- Keep configuration differences in workspace-specific .tfvars files (e.g., dev.tfvars, prod.tfvars) or environment variables, rather than embedding extensive conditional logic directly in .tf files.30

Limitations and When NOT to Use Workspaces:

A critical point of contention and potential confusion arises when comparing Terraform workspaces with directory-based environment segregation. While workspaces allow managing multiple states from a single codebase, they have a significant limitation: all workspaces within a single configuration directory share the same backend block configuration.7 A backend block cannot reference terraform.workspace or any other variable. Instead, backends derive a per-workspace state location automatically; the S3 backend, for example, stores non-default workspaces under a workspace_key_prefix, which defaults to env:. The underlying storage, such as the S3 bucket name, region, and DynamoDB lock table, therefore remains the same for all workspaces managed by that configuration.
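Backend blocks cannot use terraform.workspace or other expressions, so per-workspace state paths come from the backend itself. With the S3 backend, for example, the optional workspace_key_prefix argument (default env:) controls where non-default workspaces are stored. The following sketch uses illustrative names.

```hcl
terraform {
  backend "s3" {
    bucket               = "our-company-terraform-state"
    key                  = "app/terraform.tfstate"
    region               = "us-west-2"
    workspace_key_prefix = "workspaces" # Optional; defaults to "env:"
    encrypt              = true
  }
}

# Resulting state object keys:
#   default workspace   -> app/terraform.tfstate
#   feature-x workspace -> workspaces/feature-x/app/terraform.tfstate
```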

If environments require fundamentally different backend configurations (e.g., separate AWS accounts for dev and prod state storage, different encryption keys, or different regions for the backend itself), Terraform workspaces within a single directory are not appropriate. In such cases, directory-based segregation, where each environment has its own directory with a distinct backend.tf file, is the more robust and isolated approach.7 Google Cloud's best practices, for instance, explicitly advise against using multiple CLI workspaces for environment separation, favoring separate directories to avoid a single point of failure with a shared backend and to allow for distinct backend settings.7

Therefore, the choice depends on the required degree of isolation. For simple variations (e.g., dev/staging/prod within the same cloud account and with similar resource structures), workspaces can be a simple option, because each workspace automatically gets its own state file in the shared backend. However, for strong isolation (different accounts, regions, or significantly different resource sets and state storage requirements), directory-based segregation is superior. Many teams adopt a hybrid approach: directory-based segregation for major environments (like separate dev and prod account configurations), with workspaces inside those for more granular, temporary, or feature-specific environments when the underlying infrastructure structure is identical.

A common pain point as Terraform projects scale is the performance degradation of terraform plan and terraform apply runs. Managing the entire infrastructure in a single state file is a primary cause, as Terraform needs to refresh the status of every resource defined in that state during each operation.7 Large state files also increase the "blast radius", the potential impact of an erroneous change or state corruption.25

Strategies for Splitting Terraform State Files:

- Split by component: manage each logical component (networking, the Kubernetes cluster, each application service) in its own root module with its own state file, for example vpc.tfstate, eks.tfstate, and app-service-a.tfstate.
- Share outputs with the terraform_remote_state data source: when state is split, components often need to reference outputs from other components. The terraform_remote_state data source allows one Terraform configuration to read the output values of another, separately managed, remote state file.17 Example using terraform_remote_state to access VPC outputs:

```hcl
data "terraform_remote_state" "network_prod" {
  backend = "s3"

  config = {
    bucket = "our-company-terraform-state-prod"
    key    = "infra/core-network/terraform.tfstate" // Path to the network's state file
    region = "us-west-2"
  }
}

resource "aws_instance" "application_server" {
  ami           = "ami-0abcdef1234567890"
  instance_type = "m5.large"

  // private_subnet_ids is a list; an instance needs a single subnet ID
  subnet_id = data.terraform_remote_state.network_prod.outputs.private_subnet_ids[0]

  //... other configurations
}
```

Splitting state files significantly improves the performance of Terraform operations by reducing the number of resources Terraform needs to refresh and process for any given terraform plan or terraform apply.17 A key metric of success here is a noticeable reduction in terraform plan execution times after implementing state splitting.
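terraform_remote_state can only read outputs declared in the other configuration's root module, so the network component has to export the value explicitly. The following sketch assumes that component creates its private subnets as aws_subnet.private using count.

```hcl
# In the network component's root module (state key infra/core-network/terraform.tfstate)
output "private_subnet_ids" {
  description = "IDs of the private subnets, read by other components via terraform_remote_state."
  value       = aws_subnet.private[*].id
}
```

Treat outputs like this as a published interface. Renaming or removing one breaks the next plan of every consumer.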

However, there's a trade-off. While splitting state improves performance and reduces blast radius, excessive fragmentation can lead to a complex web of terraform_remote_state dependencies. This can make the overall architecture harder to understand and manage. Each terraform_remote_state lookup introduces a small overhead. Finding the right granularity—not too coarse, not too fine—is crucial. This often aligns with team boundaries, component independence, and differing rates of change. The goal is for teams to independently manage and deploy their infrastructure components without prohibitive plan/apply times, while keeping dependencies clear and manageable.

Often, teams adopt Terraform after some cloud resources have already been created manually or by other tools. The terraform import command and, more recently, the import block (Terraform 1.5+) allow these existing resources to be brought under Terraform management without destroying and recreating them.11

- The terraform import command: terraform import <RESOURCE_ADDRESS_IN_CODE> <RESOURCE_ID_IN_CLOUD> requires the developer to first write the corresponding resource block in their Terraform configuration.21
- The import block: this newer, declarative approach lives in the Terraform code and goes through the normal plan and apply review. It can also ask Terraform to generate the configuration for the imported resource, which makes the process less error-prone and is generally the easiest way.40 Example import block for an S3 bucket:

```hcl
import {
  to = aws_s3_bucket.my_existing_bucket
  id = "name-of-the-pre-existing-s3-bucket"
}
```

If no resource block exists yet for aws_s3_bucket.my_existing_bucket, running terraform plan -generate-config-out=generated.tf writes a generated resource block to generated.tf for review. The import itself happens on the next terraform apply.40

Pitfalls and Strategies for state import:

- Writing code by hand: the import command does not generate code, so the matching configuration must be written manually, which is error-prone.40 The import block significantly improves this.
- Drift right after import: run terraform plan immediately after an import operation to identify any discrepancies. Then either adjust the Terraform code to accurately reflect the desired state, or let the next apply update the resource to match the code.40
- Dependencies: review relationships between resources and add any needed depends_on meta-arguments after import.
- Governance: use import judiciously, with explicit approval, primarily when deleting and recreating existing resources would cause significant disruption.21 Once a resource is imported, it should be managed exclusively by Terraform to prevent further drift.

The terraform import functionality should be viewed as a migration tool for bringing unmanaged infrastructure under Terraform's control. It is not a routine mechanism for correcting configuration drift caused by out-of-band manual changes. If frequent manual changes are occurring and then being "fixed" by import, this indicates a deeper process issue that needs to be addressed, such as inadequate access controls or emergency changes not being codified back into Terraform. The state of the infrastructure should always be driven by the Terraform code.
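When many similar resources need to be imported, Terraform 1.7 and later also accept for_each in an import block. The following sketch imports two existing buckets, with hypothetical names, into a single for_each resource.

```hcl
locals {
  # Hypothetical map: resource key => name of an existing bucket
  legacy_buckets = {
    logs   = "legacy-logs-bucket"
    assets = "legacy-assets-bucket"
  }
}

import {
  for_each = local.legacy_buckets
  to       = aws_s3_bucket.legacy[each.key]
  id       = each.value
}

resource "aws_s3_bucket" "legacy" {
  for_each = local.legacy_buckets
  bucket   = each.value
}
```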

Automating Terraform operations through a continuous integration and continuous delivery (CI/CD) pipeline is non-negotiable for achieving consistency, speed, and safety at scale.

The fundamental Terraform workflow of Write -> Plan -> Apply is adapted for team collaboration when scaling 32:

- Write: developers make changes on a feature branch and open a pull request (PR).
- Plan: for each PR, a terraform plan is automatically generated and its output is made available for review by team members. This crucial step allows for collaborative assessment of proposed infrastructure changes, risk evaluation, and error detection before any resources are altered.17 A key metric here is the number of potential issues identified and rectified during the plan review phase.
- Apply: once the PR is approved and merged, terraform apply is executed, often automatically by the CD pipeline, to provision or modify the cloud infrastructure.32

A Git repository serves as the single source of truth for all Terraform infrastructure code.9 Effective branching strategies (e.g., Gitflow, feature branches) are essential to manage concurrent development and isolate changes.11 All Terraform changes must go through a PR process, where automated checks, including terraform plan output, serve as pull request status checks.17

Conceptual CI/CD Pipeline for Terraform:

```mermaid
graph LR
    A["Developer: Write Terraform code"] --> B{"Create Pull Request"}
    B --> C["CI: Checkout Code"]
    C --> D["CI: terraform init"]
    D --> E["CI: terraform validate"]
    E --> F["CI: terraform fmt -check"]
    F --> G["CI: Static analysis (tflint, tfsec)"]
    G --> H["CI: Policy Check (Open Policy Agent)"]
    H --> I["CI: terraform plan -out=tfplan"]
    I --> J["CI: Post plan output to PR"]
    J --> K{"Team Review & Approve PR"}
    K -- Approved --> L["Merge to main branch"]
    L --> M["CD: Pipeline triggered"]
    M --> N["CD: Restore saved plan artifact"]
    N --> O["CD: terraform apply tfplan"]
    O --> P["Cloud Provider: Update Infrastructure"]
```

This diagram illustrates a typical flow, integrating essential Terraform operations and checks into a VCS-driven pipeline.14

The terraform plan output generated during the PR stage acts as a "contract" for the intended infrastructure changes. Once this plan is reviewed and the PR is approved, the CD system must ensure that this exact plan (or an equivalent plan generated against the latest state if no drift occurred) is what gets applied. This is crucial for maintaining trust in the review process. Robust CD pipelines achieve this by saving the plan artifact from the PR stage and using that specific file for the terraform apply step.36 Platforms like Terraform Cloud or tools such as Atlantis often automate the management of this plan artifact lifecycle.

A well-structured CD pipeline automates key Terraform commands and incorporates various checks:

- terraform init -input=false: initializes the working directory, downloading providers and configuring the backend, without interactive prompts.1
- terraform fmt -check: enforces consistent code formatting.16
- terraform validate: catches syntax errors and basic configuration issues early. It requires an initialized working directory.6
- terraform plan -out=tfplan -input=false: creates an execution plan and saves it to a file named tfplan for later use, again without prompts.1
- terraform apply -input=false tfplan: applies the saved plan; Terraform does not ask for confirmation when applying a saved plan file. Alternatively, terraform apply -auto-approve can be run without a saved plan. Do this with extreme caution, especially in production, because it computes and applies a fresh plan with no final confirmation.1

To ensure the apply stage is consistent with the plan stage, archive the entire working directory after plan and restore it to the exact same absolute path before apply.36 This includes the .terraform subdirectory created during init and the saved tfplan file. The plan and apply stages must also run in identical environments (OS, CPU architecture, Terraform version, provider versions), often achieved using Docker containers.36
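Pinning versions in the root module is one way to keep plan and apply environments identical. In the following sketch, the exact version numbers are examples only.

```hcl
terraform {
  # Fail fast if a pipeline stage runs any other Terraform CLI version (example version)
  required_version = "= 1.7.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # Example constraint; the dependency lock file pins the exact version
    }
  }
}
```

Committing the .terraform.lock.hcl dependency lock file that terraform init generates means every stage selects the same provider versions and checksums.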

CI/CD Tool Examples:

plan on PRs and apply on merges to the main branch.22 Standard actions like actions/checkout@v4, hashicorp/setup-terraform@v2, and cloud-specific credential actions (e.g., aws-actions/configure-aws-credentials@v4 using OIDC for AWS) are commonly used.Example GitHub Actions step for terraform plan on a PR:#.github/workflows/terraform-plan.yml

name: 'Terraform Plan'

on: pull_request

jobs:

terraform:

runs-on: ubuntu-latest

permissions:

contents: read

pull-requests: write # To comment plan output

id-token: write # For OIDC authentication with cloud providers

steps:

- name: Checkout code

uses: actions/checkout@v4

- name: Configure AWS Credentials (OIDC Example)

uses: aws-actions/configure-aws-credentials@v4

with:

role-to-assume: ${{ secrets.AWS_OIDC_ROLE_ARN }} # ARN of the IAM role for GitHub Actions

aws-region: ${{ secrets.AWS_REGION }}

- name: Setup Terraform

uses: hashicorp/setup-terraform@v2

with:

terraform_version: 1.7.0 # Specify your desired Terraform version

- name: Terraform Init

run: terraform init -input=false

- name: Terraform Plan

id: plan

run: |

terraform plan -no-color -input=false -out=tfplan

# Additional steps can be added here to format and comment the plan output to the PR

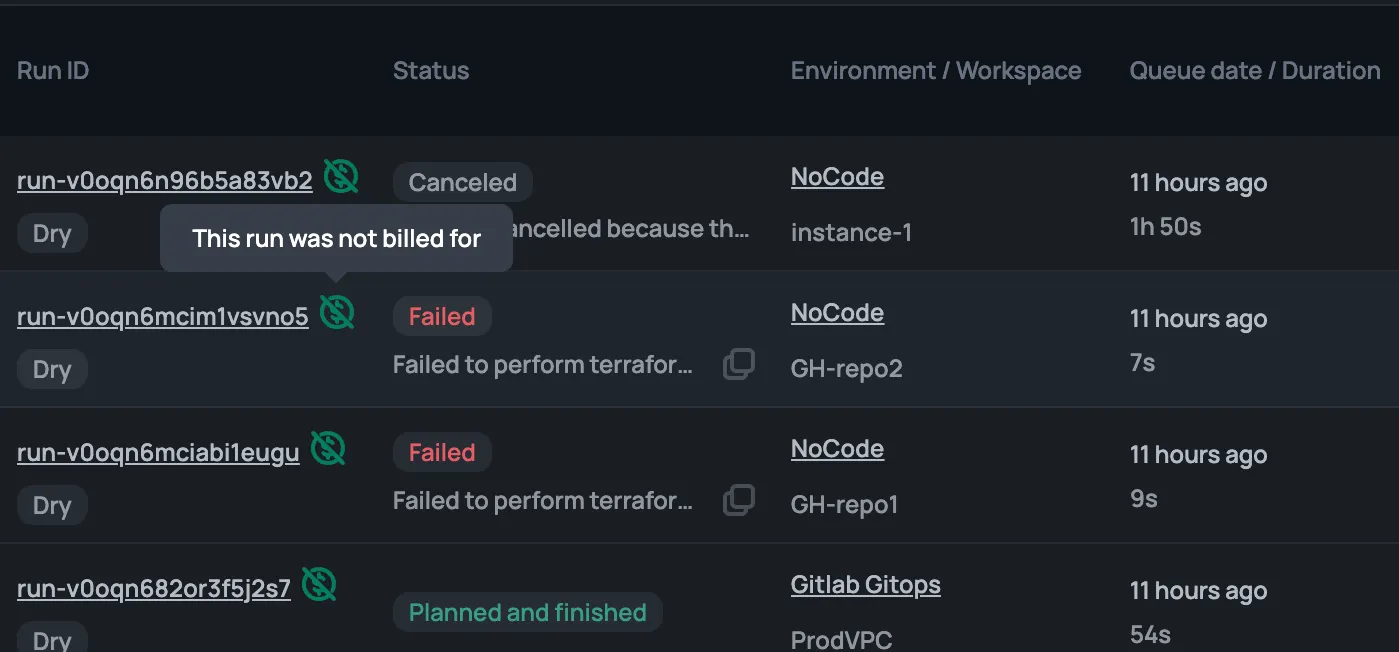

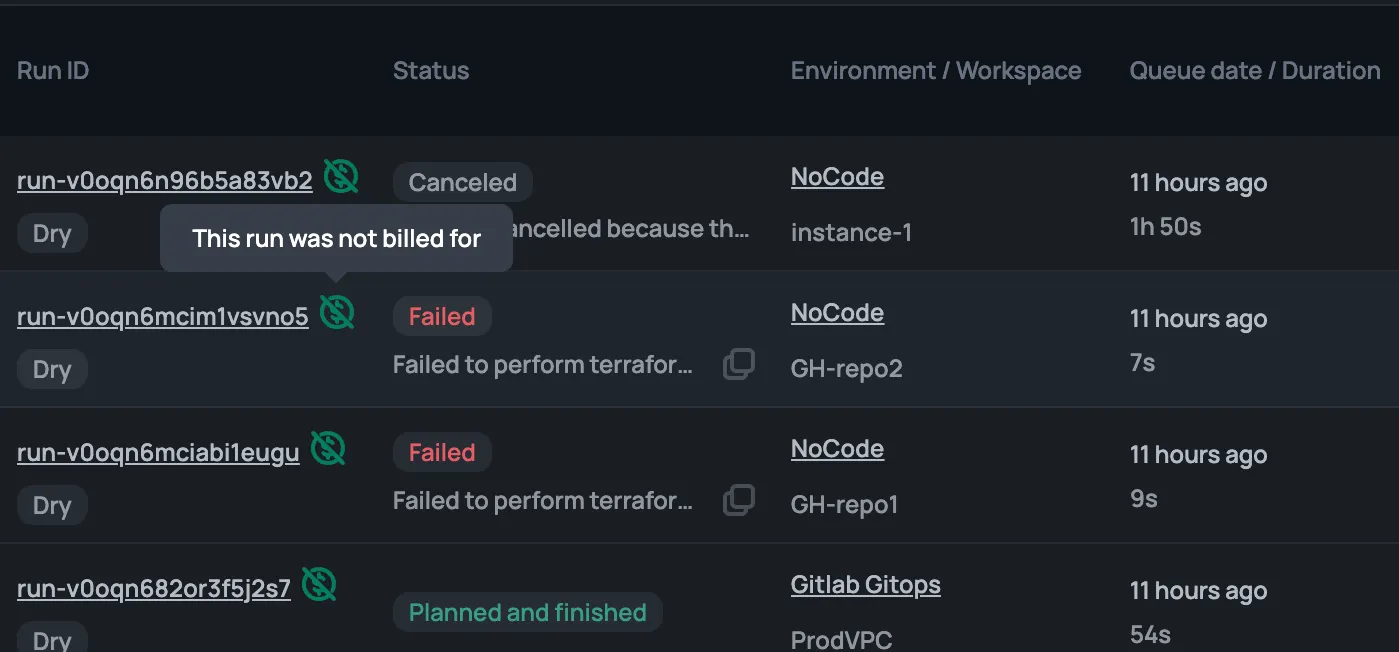

# For example, using 'actions/github-script' or tools like 'tfcmt'.gitlab-ci.yml and often leverages GitLab's built-in Terraform templates (e.g., Terraform/Base.gitlab-ci.yml) for stages like fmt, validate, build (init), and deploy (plan & apply).48 Credentials are managed as CI/CD variables.sh steps to execute Terraform binary commands or uses the Terraform plugin. Stages typically include checkout, init, validate, plan, and apply.47 Credentials can be managed via Jenkins Credentials Manager or IAM roles if Jenkins runs on EC2.While generic CI/CD tools are adaptable, specialized IaC platforms like Terraform Cloud, Spacelift, Scalr environments, and env0 offer built-in features that streamline many of these scaled Terraform operations. They often provide managed remote execution backends, sophisticated state management, integrated policy checks, collaboration features, and a user interface for reviewing Terraform runs.1 These platforms can significantly reduce the custom scripting and maintenance overhead associated with building these capabilities from scratch using generic CI/CD tools, offering an easiest way to implement many best practices.

Managing secrets (API keys, passwords, certificates) securely within a CD pipeline is critical to prevent exposure.11

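A common approach is to keep secrets in a dedicated secret manager and read them at runtime instead of hardcoding them in Terraform files. For example, with AWS Secrets Manager:

```hcl
data "aws_secretsmanager_secret_version" "api_key" {
  secret_id = "my_app/api_key"
}

# some_service_resource is a placeholder for any resource that needs the secret
resource "some_service_resource" "example" {
  api_token = data.aws_secretsmanager_secret_version.api_key.secret_string
}
```

Mark variables and outputs that carry secrets with sensitive = true to prevent them from being displayed in CLI output or logs.17 Sensitive values, including secrets read through data sources, are still stored in the state file in plain text. This is one more reason to encrypt state and restrict access to the backend. Success in secrets management is indicated by no hardcoded secrets and by tightly controlled, audited access to sensitive information.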
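The following minimal sketch applies sensitive = true to both a variable and an output. The variable name and connection string are hypothetical. In CI, the value could be supplied through the TF_VAR_db_password environment variable from the pipeline's secret store, rather than from a committed .tfvars file.

```hcl
variable "db_password" {
  type        = string
  description = "Database password, supplied at runtime (e.g., via TF_VAR_db_password)."
  sensitive   = true
}

locals {
  # Hypothetical connection string; values derived from a sensitive variable are also sensitive
  db_connection_string = "postgres://app:${var.db_password}@db.internal:5432/app"
}

output "db_connection_string" {
  value     = local.db_connection_string
  sensitive = true # Terraform rejects this output without it, because the value is sensitive
}
```

The marking only controls display. terraform output -raw db_connection_string still prints the value on demand, and the value remains in the state file.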

Understanding the financial impact of infrastructure changes before they are applied is a crucial aspect of scaling Terraform responsibly.13

Cost estimation tools can be integrated into the PR workflow to analyze the terraform plan output and provide a breakdown of potential cost changes.17 When cost implications are a first-class part of PR review, teams can make more cost-aware decisions and reduce the likelihood of budget overruns. This proactive approach is far more effective than reactive bill analysis.

As infrastructure scales, maintaining security and compliance becomes increasingly complex. Automation is key.

Open Policy Agent (OPA) is an open-source policy engine that allows organizations to define and enforce policies as code using a declarative language called Rego.1 For Terraform, OPA evaluates policies against a JSON representation of the terraform plan.16 This allows for compliance checks related to security standards, naming conventions, resource restrictions (e.g., allowed instance types or regions), and tagging requirements before any infrastructure is deployed.

OPA checks should be integrated as a step in the CD pipeline, typically after terraform plan. If policy violations are detected, the pipeline should be halted before terraform apply can proceed.16

Example OPA Policy in Rego (Enforce 'Environment' tag):

```rego
package terraform.policies.tagging

import rego.v1

# Input: the JSON plan produced by `terraform show -json tfplan`,
# whose top-level resource_changes array lists every planned change.
resource_changes := input.resource_changes

# Resource has no tags at all (tags_all is null).
# For simplicity, this applies to every resource type that exposes tags_all;
# narrow it to specific types as needed.
deny contains msg if {
	r := resource_changes[_]
	r.change.after.tags_all == null
	msg := sprintf("Resource '%s' is missing all tags. Required tags include 'Environment'.", [r.address])
}

# Resource has tags, but no 'Environment' key.
deny contains msg if {
	r := resource_changes[_]
	r.change.after.tags_all != null
	not r.change.after.tags_all.Environment
	msg := sprintf("Resource '%s' is missing required tag 'Environment'.", [r.address])
}

# Resource has an 'Environment' tag with an empty value.
deny contains msg if {
	r := resource_changes[_]
	r.change.after.tags_all != null
	r.change.after.tags_all.Environment == ""
	msg := sprintf("Resource '%s' has an empty 'Environment' tag.", [r.address])
}
```

This Rego policy checks whether resources in the plan are missing the 'Environment' tag or have it set to an empty string.39 It expects the JSON form of a saved plan (terraform show -json tfplan), whose top-level resource_changes array lists each planned change. More sophisticated policies can check for specific values, allowed instance types (e.g., ensuring no overly large EC2 instances in dev), or that S3 buckets do not have public read ACLs.39
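On the configuration side, the AWS provider's default_tags block is one way to satisfy a policy like this for AWS resources. It merges provider-level tags into the tags_all attribute of every supported resource, which is the attribute the policy inspects. The following sketch assumes a root-module variable named environment.

```hcl
variable "environment" {
  type        = string
  description = "Environment name, e.g. dev or prod."
}

provider "aws" {
  region = "us-west-2"

  # Applied to every resource this provider configuration manages that supports tags
  default_tags {
    tags = {
      Environment = var.environment
    }
  }
}
```

The policy is still worth keeping as a safety net, for example for resources created through other provider configurations or aliases that lack default_tags.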

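Tools like conftest can be used to test Terraform plans against OPA policies locally or in a CI pipeline.16 By default, conftest evaluates rules in the main package. A policy declared in another package, such as terraform.policies.tagging above, therefore needs the --namespace flag (or --all-namespaces). Terraform Cloud and other IaC management platforms also offer native support for OPA or Sentinel (HashiCorp's own policy as code framework).16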

Integrating Open Policy Agent shifts compliance and security checks "left," making them a proactive part of the development lifecycle. This significantly reduces the risk of deploying non-compliant or insecure infrastructure and empowers developers with immediate feedback, improving both development velocity and security posture. Automated policy-based decisions become an integral part of the Terraform workflow.

Static analysis tools scan Terraform files for potential issues without executing them.

- tflint: a popular linter that checks for provider-specific errors and deprecated syntax and enforces best practices.17
- tfsec and checkov: open-source, security-focused tools that scan Terraform configurations for misconfigurations that could lead to vulnerabilities.11

These tools should be integrated into local development workflows via pre-commit hooks and run as early stages in the CI/CD pipeline.11 This gives developers fast feedback and acts as an automated quality gate, reducing the burden on human reviewers and accelerating the learning process for developers.
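The following minimal .tflint.hcl sketch enables the bundled Terraform rules and the AWS ruleset, and turns on naming-convention checks. The plugin version is an example; pin the release your team has tested and run tflint --init to install it.

```hcl
# .tflint.hcl
plugin "terraform" {
  enabled = true
  preset  = "recommended"
}

plugin "aws" {
  enabled = true
  version = "0.30.0" # Example version
  source  = "github.com/terraform-linters/tflint-ruleset-aws"
}

# Enforces snake_case names for resources, variables, outputs, and more
rule "terraform_naming_convention" {
  enabled = true
}
```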

Thorough testing is essential to ensure that Terraform infrastructure code behaves as expected and doesn't introduce regressions.

The testing pyramid concept applies to IaC: start with cheaper, faster tests and move towards more comprehensive, slower ones.53

- Unit tests: these exercise individual modules, typically by inspecting the terraform plan output to verify that the module would configure resources correctly for given inputs, without actually deploying them. Terraform v1.6 introduced a native testing framework based on .tftest.hcl or .tftest.json files. Terraform v1.7 added provider mocking, which enables true unit tests that don't require live cloud services.95 Example of a .tftest.hcl file for unit testing a module's plan:

```hcl
# modules/aws_s3_custom_bucket/tests/main.tftest.hcl

variables {
  bucket_name        = "unit-test-bucket"
  enable_versioning  = true
  lifecycle_rule_ids = ["delete_old_versions"]
}

# Mock the AWS provider so no real API calls are made (Terraform 1.7+)
mock_provider "aws" {
  mock_resource "aws_s3_bucket" {
    defaults = {
      arn = "arn:aws:s3:::mock-bucket" # Provide expected computed values if needed
    }
  }
}

run "s3_bucket_plan_validation" {
  command = plan # Only run a plan, not an apply

  # Assumes the module exposes these two outputs
  assert {
    condition     = output.versioning_enabled_status == "Enabled"
    error_message = "S3 bucket versioning should be planned as 'Enabled'."
  }

  assert {
    condition     = length(output.lifecycle_rules) > 0
    error_message = "S3 bucket should have lifecycle rules planned."
  }
}
```

This test uses command = plan and a simplified mock_provider block to validate module logic without an actual deployment.95 The test file lives in the module's own tests/ directory, so the module under test is the root configuration. Assertions therefore reference its outputs directly (output.<name>) rather than through a module block.

- Integration tests: frameworks such as Terratest deploy real infrastructure. A test runs terraform apply, makes assertions against the live infrastructure (e.g., checking an S3 bucket's properties, making HTTP requests to a deployed load balancer, SSHing into an instance), and then runs terraform destroy.6 A Terratest example in Go:

```go
package test

import (
	"fmt"
	"strings"
	"testing"

	"github.com/gruntwork-io/terratest/modules/aws"
	"github.com/gruntwork-io/terratest/modules/random"
	"github.com/gruntwork-io/terratest/modules/terraform"
	"github.com/stretchr/testify/assert"
)

func TestS3BucketModule(t *testing.T) {
	t.Parallel()

	awsRegion := aws.GetRandomStableRegion(t, nil, nil)
	// S3 bucket names must be lowercase; random.UniqueId can return uppercase characters
	uniqueID := strings.ToLower(random.UniqueId())
	bucketName := fmt.Sprintf("terratest-s3-%s", uniqueID)

	terraformOptions := terraform.WithDefaultRetryableErrors(t, &terraform.Options{
		TerraformDir: "../examples/s3_bucket_example", // Path to an example that uses the module
		Vars: map[string]interface{}{
			"bucket_name":       bucketName,
			"enable_versioning": true,
		},
		EnvVars: map[string]string{"AWS_DEFAULT_REGION": awsRegion},
	})

	// Ensure resources are destroyed even if an assertion fails
	defer terraform.Destroy(t, terraformOptions)

	terraform.InitAndApply(t, terraformOptions)

	// The bucket should exist
	aws.AssertS3BucketExists(t, awsRegion, bucketName)

	// Versioning should be enabled
	actualVersioningStatus := aws.GetS3BucketVersioning(t, awsRegion, bucketName)
	assert.Equal(t, "Enabled", actualVersioningStatus)
}
```

Other infrastructure testing tools include rspec-terraform, Goss, and awspec.124

The act of writing tests often drives better module design. To make modules testable, developers are naturally encouraged to create focused components with clear input/output interfaces, leading to higher-quality and more reusable Terraform modules.
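Tests can also check that a module's input contract rejects bad values. The following sketch is a negative test written with the native framework (Terraform 1.7+ for mock_provider). It assumes the hypothetical aws_s3_custom_bucket module declares a validation block on var.bucket_name that rejects names that are not valid S3 bucket names.

```hcl
# modules/aws_s3_custom_bucket/tests/validation.tftest.hcl
mock_provider "aws" {}

run "rejects_invalid_bucket_name" {
  command = plan

  variables {
    bucket_name        = "Invalid_Bucket_Name" # Uppercase and underscores are not valid in S3 bucket names
    enable_versioning  = true
    lifecycle_rule_ids = []
  }

  # The run passes only if validation of var.bucket_name fails
  expect_failures = [
    var.bucket_name,
  ]
}
```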

End-to-end tests validate that the entire deployed system, composed of multiple Terraform modules, functions correctly for a specific application or service.53 This typically involves deploying all constituent modules into a dedicated test environment and then running application-level tests or specific infrastructure checks to ensure components are correctly integrated (e.g., a web application can connect to its database, traffic flows through the load balancer to the target group and auto scaling group correctly). While costly and time-consuming, these tests provide the highest confidence that the overall Terraform "blueprint" for an application's infrastructure is sound and fit for its intended use case.

As Terraform projects and the infrastructure they manage grow, terraform plan and terraform apply times can increase, and debugging errors can become more complex.

Targeted operations (-target): The terraform plan -target=resource_address and terraform apply -target=resource_address flags limit operations to specific resources or modules. This is useful for quick fixes or for debugging isolated parts of a large configuration, but it should be used with extreme caution. Over-reliance on -target can leave the Terraform state inconsistent with the actual deployed infrastructure (state drift), because untargeted resources are not considered or updated.17

Skipping refresh (-refresh=false): terraform plan -refresh=false skips the step in which Terraform queries the cloud providers to update the state with the current status of resources. This can significantly speed up plan generation when you are certain that no out-of-band changes have occurred. It is risky, however: if the actual infrastructure has drifted from the state file, the plan will be based on stale information.35

Adjusting parallelism (-parallelism=n): Terraform performs operations such as resource creation, update, and deletion in parallel, with up to 10 concurrent operations by default. The -parallelism=n flag adjusts this limit. Increasing it may speed up Terraform runs, but it can also trigger the API rate limits imposed by cloud providers;17 conversely, decreasing it can help when rate limiting is an issue.

Provider retry settings: Beyond -parallelism, many providers expose their own retry configuration, such as max_retries or retry_mode. For example, the AWS provider supports max_retries and a retry_mode that can be set to standard or adaptive.132 The Azure provider also has retry options,77 and the Google provider offers a batching block that consolidates some API calls.79

Efficient use of count and for_each: While essential for dynamic resource creation, overly complex logic within these constructs can slow down plan generation. Google Cloud's best practices suggest preferring for_each over count when iterating over a map or a set of strings, because for_each provides more stable resource addressing when the collection changes.16

Data sources: Data sources are generally read during the terraform plan phase, so a large number of them, or data sources that query slow APIs, can significantly increase plan times.10 If a data source's arguments depend on attributes of managed resources that are not known until the apply phase, Terraform defers reading that data source until apply, making the plan less definitive.105 Place data sources near the resources that reference them, or in a dedicated data.tf file if there are many.10

Performance optimization in Terraform is not about a single tweak but a holistic approach encompassing code structure (module size, state splitting), efficient resource definitions, and an understanding of provider interactions.

Debugging complex Terraform HCL code with many modules and variables can be daunting.

Verbose logging: Set TF_LOG=TRACE (most verbose) or TF_LOG=DEBUG, and set TF_LOG_PATH=/path/to/terraform.log to write the logs to a file for easier analysis.60

terraform console: Interactively test expressions, inspect variable values, and evaluate resource attributes without running a full plan/apply cycle.93 This is invaluable for understanding how Terraform interprets your code.

Targeted operations: Use terraform plan -target=... or terraform apply -target=... to focus on a specific resource or module while debugging, but remember the caveats about state drift.17

State inspection: Use terraform state show <RESOURCE_ADDRESS> to view the attributes of a specific resource in the state, or terraform state pull to download and examine the entire remote state file (only when necessary, and with caution).25

Cycle errors: These occur when resources depend on each other circularly (e.g., aws_security_group.A depends on aws_security_group.B, and B depends on A). Use terraform graph to visualize dependencies, and resolve the cycle by refactoring (e.g., using separate aws_security_group_rule resources instead of inline rules) or by introducing intermediate resources.17

Provider and plugin errors: Run terraform init -upgrade to update plugins, or check .terraform.lock.hcl for pinned older provider versions that might be incompatible.56

Resource name conflicts: If a resource already exists outside Terraform's management, use terraform import to bring it under management, or adjust the naming.

Variable and type errors: terraform validate and careful review of variable definitions (variables.tf) and .tfvars files are key. The TF_LOG=DEBUG output can also show the variable values being processed.135

Provisioner failures: remote-exec and local-exec provisioners can fail. Debugging these often requires checking the logs on the target machine (for remote-exec) or the CI/CD agent output. Provisioners are generally discouraged and should be used only as a last resort, when the desired outcome cannot be achieved with native Terraform resources.16

Successfully managing Terraform at scale is an ongoing journey, not a one-time setup. It requires a commitment to best practices across code structure, state management, automation, security, and testing.
By adopting modular design with reusable Terraform modules discoverable via a module registry, implementing robust remote state management with locking, and leveraging Terraform workspaces appropriately for different environments, teams can lay a solid foundation.

Automating the Terraform workflow through a CI/CD pipeline, complete with pull request status checks, automated terraform plan reviews, and policy enforcement using tools like Open Policy Agent, is crucial for maintaining both speed and stability. This automation should extend to Terraform tests, so that infrastructure changes are validated before deployment.

Addressing developer pain points such as slow Terraform operations, complex debugging, and managing dependencies across a large Terraform configuration requires a strategic approach. Techniques like state splitting, careful use of terraform_remote_state data sources, and understanding provider-specific behaviors (like API rate limits) are essential.

Ultimately, scaling Terraform effectively means empowering team members to contribute confidently and efficiently while keeping the entire infrastructure reliable, secure, and maintainable. Platforms such as Terraform Cloud, along with other platforms and open-source tools, can provide significant leverage by offering managed capabilities such as remote execution, remote state backends, and policy checks, but the principles discussed here remain vital. By focusing on these areas, organizations can harness the full power of HashiCorp Terraform to manage even the most complex cloud infrastructure at scale.